How to Train an LLM on Your Own Data: Beginner’s Guide

HeavybitHeavybit

HeavybitHeavybit

Introduction to Training an LLM

Organizations of all sizes and maturity levels are adopting artificial intelligence (AI) quickly. While enterprises are notorious for slower adoption, their retention rates for AI applications are now hitting 63% annually, up from 41% the previous year. So while there are arguably overhyped, generic use cases that could fizzle out, AI is becoming stickier in some places.

If you’re at an organization that’s already leveraging generative AI, you may be tasked with exploring innovative applications for it. Large Language Models (LLMs) offer a range of opportunities, but while pre-trained LLMs like the ChatGPT family from OpenAI and the Llama family from META are excellent for general purposes, there’s a good chance your company will need custom LLM models that are a bit more bespoke.

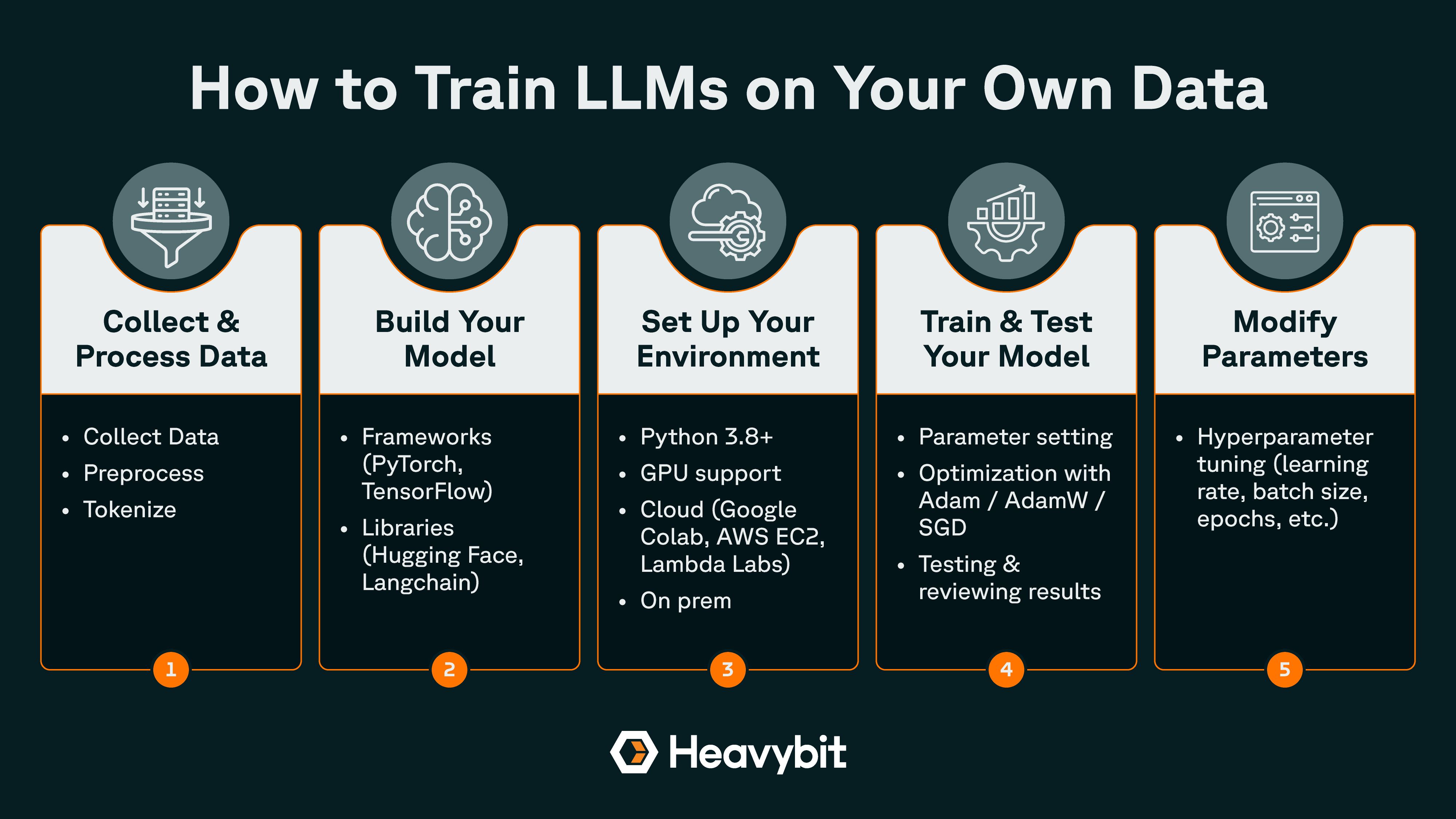

Training LLMs on your own data requires processing, model engineering, setup, testing, and iteration. Click to zoom in on this infographic.

Teams can optimize models for better on-the-fly performance using techniques like retrieval-augmented generation (RAG), or they can take the approach of training their LLM on their own data. (Note that a training project, the initialization of an AI model’s weights against a dataset, differs from a fine-tuning LLM project, which further trains pre-trained models and is often even more costly in terms of computational resources).

Whether you're building a chatbot with expertise fueled by internal documentation, generating domain-specific content, building a natural-language processing (NLP)-focused BERT model for a text data or text generation use case, or enhancing existing models with proprietary knowledge, this tutorial will walk you through the practical steps required to train a language model on your own dataset.

More Resources on LLM Engineering

- Article: LLM Fine-Tuning: A Guide for Engineering Teams in 2025

- Article: RAG vs. Fine-Tuning: What Dev Teams Need to Know

- Article: Synthetic Data for AI: Purpose and Use Cases

- Article: How to Create Data Pipelines

What Is an LLM and Its Key Components?

If you’re reading this, there’s already a good chance you know about LLMs, here’s a brief recap. An LLM is a deep learning model trained to understand and generate human language. LLMs are commonly built on the Transformer architecture, introduced in the seminal "Attention is All You Need" paper.

Key components of an LLM include:

- Tokenization: Breaks inputted text into manageable units/tokens (such as words or subwords) so it’s machine-readable.

- Embedding Layer: Maps tokens to high-dimensional vectors. These embeddings are updated during model training to capture task-specific meaning.

- Positional Encoding: Added to token embeddings to give the model information about the position of each token in the sequence.

- Attention Mechanisms: Allows the model to weigh the relationships between all tokens in a sequence, and dynamically focus on the most relevant components of the context for each token.

- Feed-Forward Networks: Process each position independently, and enrich the model’s capacity to detect and process complex patterns per the model’s algorithms.

- Output Layer: Converts the final token representations into probabilities over the vocabulary, which then generates predictions, such as the next token in a sequence.

When combined, these elements of an LLM create a pipeline that transforms your inputted raw text into outputted meaningful predictions with nuanced understanding and context-aware sensitivity. Now let’s talk about how to apply that to your organization.

Why Train an LLM on Your Own Data?

If you have a common medical problem, such as a cough or headache, you’re probably fine going to see a doctor who is a general practitioner. On the other hand, if there are recurring concerns about specific parts of your body, such as your knees or shoulders, you might want to schedule an appointment with someone who specializes in that field, such as an orthopedic surgeon. The value of your own LLM, trained to focus on your users’ needs, can be seen in a similar way.

While GPT-4, for instance, might be able to provide a broad overview of what your organization does at a high level — based on publicly available information — it’s not going to be able to do so with the same level of granular knowledge that is common amongst your co-workers.

By training an LLM on your own dataset, you can achieve:

Domain Knowledge: General models will struggle with niche terminology or specialized workflows. By training your own LLM on your own specific data tailored to your use case (like support tickets, codebases, or datasheets), you ensure the model internalizes domain-specific vocabulary, abbreviations, and context.

Better Performance: Generic models can be imprecise on narrow tasks. Fine-tuning on task-relevant examples, such as customer questions, code reviews, or internal documentation, significantly boosts accuracy, relevance, and reliability. For use cases where data freshness is critical, updating your data (such as via a data pipeline) using a real-time cadence may be necessary.

Privacy and Control: If you choose to host and train your own LLM on-premise or within a secure cloud environment, you’ll ensure that sensitive, regulated, or proprietary data stays within your infrastructure. This is especially important for industries with compliance requirements or IP concerns.

Customization: By fine-tuning the model's highly-sensitive behavior, you can better match your organization’s brand voice, formatting standards, or response strategies. This leads to a more consistent and aligned user experience across your product or platform.

Training an LLM on your own training data turns a general-purpose tool into a purpose-built solution aligned exclusively with your environment, goals, and constraints. That said, anyone who has successfully built, launched, and maintained in-house tools knows it can all seem easier said than done.

How to Train an LLM on Your Own Data: A Step-by-Step Guide

The success of training your own LLM using training data you own hinges on a variety of factors, but let’s outline the broad steps, using Python and open-source tools where available for simplicity.

1. Collect and Process Your Data

It’s a lot easier and efficient to train your model if your assembled training data is cleaned up and standardized. Below are steps and tools to get high-quality data ready (and build a custom dataset as needed):

Data Collection

Here are a few sources to either sample or start datasets:

For the actual contents of your data, you’ll need to figure out what items make the most sense for your use case, such as:

- Internal documentation

- CRM or support tickets

- Chat logs or Slack messages

If you’re building a customer support LLM, for example, you can start with a dataset like MultiWOZ, which is a collection of multi-domain task-oriented dialogues.

Preprocess Your Data

Once you’ve got your data, you’ll need to prepare it for training. Preprocessing your training data will result in greater efficiency and reduced latency. Here’s what you need to look for:

- Remove duplicates to avoid skewing your model

- Watch for data gaps and remove rows with missing context

- Filter incomplete examples, such as dialogues without responses

- Label your data if needed for supervised fine-tuning

- Eliminate HTML tags and special characters

- Normalize text (lowercasing, removing stopwords, etc.)

Finally, tokenize and convert your text to a numerical format using tools like Hugging Face's tokenizer:

python

from transformers import AutoTokenizer

sample_text = "Hello, how can I help you today?"

tokens = tokenizer(sample_text, padding="max_length", truncation=True, return_tensors="pt")

You may also embed the tokens or assess attention layers later in the model pipeline, but at this stage, it’s a good idea to focus on ensuring your data is in a standardized, clean text form that will play nicely with any API you use to feed data to your model

2. Build Your Model

At the heart of the majority of LLMs is the aforementioned Transformer architecture, but luckily you won’t need to build a full custom LLM from scratch. For example, you can use popular Python frameworks such as PyTorch and TensorFlow, combined with libraries like Hugging Face Transformers or Langchain to work with a prebuilt base model or customize your own.

Here’s an illustrative example of loading a pretrained model using Hugging Face Transformers:

python

from transformers import AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained("gpt2")

The above makes sense if you're fine-tuning a full model. For efficient training, consider techniques like LoRA or adapters that let you train only a subset of the parameters.

3. Set Up Your Environment

You’ll need a proper development environment to train and test an LLM, which requires high-performance capabilities. Here are some examples:

- Python 3.8+

- GPU support (e.g., NVIDIA CUDA)

- Libraries: PyTorch, TensorFlow, Hugging Face Transformers, Tokenizers

- Cloud options: Google Colab, AWS EC2 with GPU, Paperspace, or Lambda Labs

- On-prem

Example setup using Hugging Face:

bash

pip install torch transformers datasets

To run on GPU:

python

import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

Step 4: Train and Test the Model

Now comes the actual model training process.

First, set the initial parameters:

- Epochs: Number of passes through the dataset (e.g., 3–5 for small datasets)

- Batch size: Number of samples per batch (e.g., 16 or 32)

- Learning rate: Controls how much the model adjusts per update

Example using Hugging Face's Trainer:

python

from transformers import Trainer, TrainingArguments

training_args = TrainingArguments(

output_dir="./results",

per_device_train_batch_size=16,

num_train_epochs=3,

logging_dir="./logs"

)

trainer = Trainer(

model=model,

args=training_args,

train_dataset=train_dataset,

eval_dataset=eval_dataset

)

trainer.train()

Optimization with Stochastic Gradient Descent (SGD)

Modern LLMs often use Adam or AdamW, which converge faster and handle sparse gradients better than SGD. It may make sense to use SGD only for experimentation or comparison, though if you prefer classic SGD, here’s an example:

python

import torch.optim as optim

optimizer = optim.SGD(model.parameters(), lr=0.01)

Test and Interpret the Results:

Your next steps should include evaluating your model’s loss, perplexity, and task-specific metrics:

python

trainer.evaluate()

To further break down the evaluation criteria, let’s look at each category:

- Perplexity: The exponentiated average loss. This is the go-to metric that tells you how “confused” the model is when predicting the next token. Lower perplexity means more confidence and accuracy in its predictions.

- Loss: How far off the model’s predictions are from the correct answers (ground truth). It's computed during training using a function, which is usually cross-entropy loss for language models.

- Task-Specific Metrics: Measure the model’s performance on the actual job it’s intended to do, rather than just general language ability. For example, If your LLM is summarizing text, you might use ROUGE or BLEU scores. If it’s answering questions, Exact Match (EM) or F1 score might be better. Each metric captures success in a way that generic benchmarks cannot.

As a general rule, during training, you’ll want to measure log loss and perplexity after every epoch/batch. At the validation stage, you’ll calculate all your metrics on a held-out dataset.

Step 5: Modify Parameters (Hyperparameter Tuning)

With proper tuning, your LLM will train faster, generalize better, and perform more accurately on its assigned custom tasks.

Hyperparameter tuning is the process of systematically adjusting the configuration settings that control how a machine learning model learns during training. Unlike model parameters (like weights and biases, which are learned from data), hyperparameters are set manually before training begins.

When training LLMs, tuning hyperparameters is crucial to getting better performance, especially on custom or domain-specific datasets.

Since LLMs are incredibly sensitive to how they’re trained, even small changes in hyperparameters can lead to noticeable differences in output quality or model accuracy. Once you determine the correct hyperparameters, you can:

- Speed up convergence

- Prevent overfitting or underfitting

- Improve generalization to unseen text

- Reduce training time and cost

Hyperparameters you can tweak include:

- Learning Rate: Controls how much model weights are adjusted during backpropagation.

- Batch Size: Number of samples processed before the model updates weights.

- Number of Epochs: How many times the model sees the entire training dataset.

- Weight Decay: L2 regularization that penalizes large weights.

- Dropout Rate: The probability of dropping a unit during training to help prevent overfitting.

- Warmup Steps: Gradually increases the learning rate from zero to its target value over these steps.

- Gradient Clipping: Prevents exploding gradients by capping the norm of the gradient vector.

- Sequence Length: Defines how many tokens the model can handle at once.

You can use tools like Weights & Biases, Optuna, or Ray Tune to automate tuning. It’s a good idea to not try to tune everything at once, which can muddle your results. Instead, it can be better to prioritize by learning rate, batch size, and epochs first, then work your way down.

It’s also helpful to evaluate your model using metrics like perplexity, BLEU score, or custom task-based metrics. It’s also a good idea to use a validation dataset to avoid overfitting:

python

# Example of evaluating perplexity

import torch

import math

loss = trainer.evaluate()["eval_loss"]

perplexity = math.exp(loss)

Final Thoughts

Training an LLM on your own data is no longer the exclusive domain of large research teams. With open-source tools, pre-trained models, and cloud-based compute, individual developers and small teams can create powerful, domain-specific LLMs.

If you’re exploring how to use LLMs to enhance your product or automate domain-specific tasks, this process can be your foundation.

Content from the Library

How Data Serialization Improves AI Token Economics

How Better Data Formatting Affects Dollars and Cents in AI Text-based large language models (LLMs) process whatever text a user...

How to Approach Multi-Agent Frameworks

What Goes Into Multi-Agent Orchestration AI agents are autonomous systems which take action independent of regular human...