How to Approach Multi-Agent Frameworks

HeavybitHeavybit

HeavybitHeavybit

What Goes Into Multi-Agent Orchestration

AI agents are autonomous systems which take action independent of regular human intervention. AI startups have been exploring agentic functionality for some time by way of agentic workflows that utilize agents to sequentially execute steps in a pre-defined process.

However, to scale their AI programs, organizations are experimenting with agentic orchestration, the process of managing multiple agents as each one executes its tasks. As one founder puts it, having multiple agents under the hood may turn engineering into a multiplayer discipline that doesn’t just emphasize human-to-human collaboration, but also requires simultaneous human-in-the-loop oversight of AI agents for a variety of use cases.

We discussed the potential of multi-agentic orchestration with researcher Salah Alzu’bi, who currently works on the open-source orchestration project ROMA.

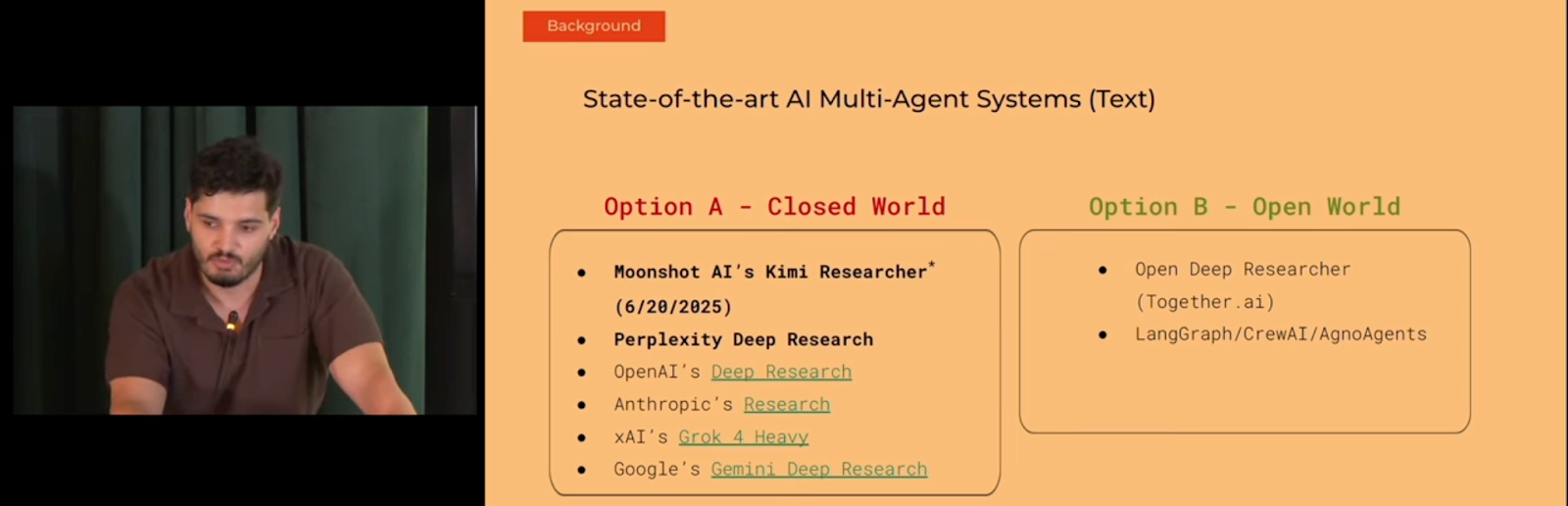

Researcher Salah Alzu’bi discusses multi-agent systems. Image courtesy Open AGI Summit.

Approaching Multi-Agent Architecture

Alzu’bi explains that the project arose from a need to provide a common framework for multi-agent communication. “For example, deep research is a common multi-agent use case. And one project might take the approach of having a search agent that grabs a few queries, then extracts information, then has a verifier tell it what it’s missing, and loops again, while another repo might have a different system entirely.”

Alzu’bi suggests the ROMA project was born in part from the need to benchmark which frameworks had better performance and scaled better. To do so, the team needed to choose how it would assign tasks to agents for execution, which include common strategies like:

Flat Decomposition

Operating agents at the same level of abstraction without any hierarchical separation. Flat decomposition systems are used in distributed sensor networks and swarm robotics as they benefit from greater flexibility and generally not having a single point of failure. However, they’ll often have higher overhead for coordination.

Graph-Based Decomposition

A system that represents tasks and dependencies as a graph, within which agents handle nodes or subgraphs. Graph-based is used in task-and-motion-planning systems for robots as they can support complex dependencies and are a good fit for distributed optimization. However, graph-based systems tend to require in-depth dependency tracking.

Plan-/Goal-Based Decomposition

This system focuses on agents achieving goals rather than pre-defined subtasks, breaking down high-level goals into plans. It’s used in goal-conditioned reinforcement learning as it can adapt well to changing environments, but can require significant planning overhead and strong reasoning capabilities.

Hierarchical Decomposition

A hierarchical system breaks down tasks into a clearly-defined, tree-like structure of subtasks. This system is used in areas like automated workflows and human-AI collaboration as it can scale better and offer improved reusability, though it can have issues with improper task specification if agents get confused about their precise role.

The team settled on a hierarchical decomposition, utilizing a framework specifically designed to avoid task specification issues by breaking subtasks into three clear categories: Think, write, or search. “The idea is to use a meta-agent framework with recursive recursive hierarchical decomposition, which is what we feel is the most natural, intuitive way to think of these multi-agent systems.”

Architecture Choices: Context Rot, Parallelization, Hierarchy

“We were mostly inspired by Professor Jürgen Schmidhuber’s paper on recursive decomposition for long-form generation. The idea is to recursively break down tasks, which is a very natural way to think about executing tasks in sequence.” The researcher offers an analogy to hierarchical delegation within companies: CEOs toss an important contract to VPs, who break down the project into subtasks for different teams, though the approach has other technical benefits.

Pioneering AI researcher Jürgen Schmidhuber at the 2017 AI for Good conference. Image courtesy ITU.

“Another big problem that hierarchical decomposition addresses is context rot, the degraded LLM performance we see when the context size gets very big. Context rot can cause agents to stop performing even simple tasks well.” While techniques like context compaction (which condenses older parts of an interaction to improve performance) have emerged, Alzu’bi suggests that hierarchical may be one of the clearest solutions to the problem.

“Having a hierarchical structure allows for the root task to break things down. And then, each of these little subtasks doesn't even need to know what's above: It has the parent orchestrating that stuff for them. So all [individual agents] need to do is basically just keep solving the task at hand.” The researcher suggests that the approach helps local agents solve problems without a great need for observability and without a significant risk of rot.

“In particular, parallelization can solve things really well. You don't need to wait for sequential processing of tasks. For example, if you ask a multi-agent system to map a city, you can break that down into different regions. You don’t need to wait for one neighborhood to finish before starting the next — each agent can map its own area in parallel. Then at the end, the parent agent looks at all the regional maps, makes sense of them, and produces a complete city map.”

Finally, the researcher explains his team’s deliberate choice to not treat every task equally. “Not many multi-agent systems seem to do this today, but you don't really need a state of the art model attacking all your tasks at any given moment. For example, you can use a very small model that performs simple tasks very well to save cost and time (because latency can also be a factor). It’s about customizing different models and agents to do particular things within an intuitive framework.”

How to Think About Multi-Agent for Startups vs. Enterprises

The researcher suggests that multi-agent is still new enough to offer a variety of opportunities, though an interesting area for upside is going beyond single-purpose AI products like coding assistants. “I think of what we’re working on as a framework to quickly prototype, or even ‘vibe prompt,’ if you want to call it that, a high-performance multi-agent system for a particular set of tasks that might include search or knowledge retrieval. Just spin it up, change a few prompts, and you have your agent.”

However, building multi-agent for enterprises can bring other challenges, including the need for on-prem security and compatibility with a variety of models. “We designed our project to let you use whatever models you want for whichever use case within a certain budget, which is something people care more about at the enterprise level.”

The researcher notes that some customers may be sensitive to other operational factors like token budgets and latency. “We built our project to let users fix their budget and latency to desired levels, which we think helps solve some of those problems for enterprises with a preference for on-prem security and flexibility around model choices.”

How do organizations know when they’re ready for multi-agent? Or alternatively, can they get by with a simpler, single-agent workflow solution? “Every organization will need to do the validation themselves and think about the trade-offs.”

"Organizations should have their own internal evaluation suite of benchmarks they run their models against.” -Salah Alzu’bi, AI Researcher/Sentient Foundation

For example, single-agent workflow setups can offer faster, simpler deployments, lower overhead for deployment and maintenance, and less operational risk. However, they will hit context limits on larger tasks faster, will require execution that remains rigid and serialized, and perform poorly as tasks grow in complexity.

Multi-agent workflow setups can be more complicated to deploy, demonstrate noticeably higher latency, and incur higher risks at launch due to the additional components. But they can also handle significantly more-complex challenges with longer context windows, and they can evolve more easily over time by swapping in new or upgraded models and agents.

Alzu’bi notes that most enterprises he works with currently seem to focus on an expected set of metrics. “You have metrics like latency, costs, and model performance in general, as well as user satisfaction. But organizations should have their own internal evaluation suite of benchmarks they run their models against.”

Security, Governance, and the Future

At minimum, before implementing a multi-agent framework, the researcher recommends at least a few common-sense security and governance measures. “You don’t want your LLMs having access to your API keys, your passwords, or any of that. A simple prompt injection can leak everything.”

“I would also recommend an internal safety alignment check. At minimum, come up with a list of the most common prompt injection or security threats that LLMs are known to suffer from and test against them. Obviously, this is an area that’s still under development and there are new threats every day, but a good rule of thumb is: Minimize exposure. Don’t give LLMs access to things you care too much about.”

“At this time, I would also recommend human oversight with a lot of security checks. There was an incident a little while ago with an AI app deleting a user’s production database. I wouldn't deploy multi-agent systems to high-stakes environments with access to people’s bank accounts yet...not until we fully understand what the risks are.”

Content from the Library

How to Properly Scope and Evolve Data Pipelines

For Data Pipelines, Planning Matters. So Does Evolution. A data pipeline is a set of processes that extracts, transforms, and...

Data Renegades Ep. #8, One Human Plus Agents with Scott Breitenother

On episode 8 of Data Renegades, CL Kao and Dori Wilson sit down with Scott Breitenother to explore how AI is reshaping the modern...

Open Source Ready Ep. #31, Developer-First Data Engineering with dltHub

In episode 31 of Open Source Ready, Brian and John sit down with Matthaus Krzykowski, Thierry Jean, and Elvis Kahoro to explore...