How Data Serialization Improves AI Token Economics

HeavybitHeavybit

HeavybitHeavybit

How Better Data Formatting Affects Dollars and Cents in AI

Text-based large language models (LLMs) process whatever text a user inputs as a prompt in token-based intervals. Each token is effectively a text-based unit of measurement (not necessarily an individual word) for how much context a model must process in a prompt to generate a response.

“What’s the best way to debug this recurring 500 error when customers place orders in my e-commerce system that includes inventory management, order processing, and payment processing apps?” has significantly more tokens to process than “What’s a good TV show on Thursdays?”

Longer queries with more text mean higher risk of context rot, a reduction in response quality as the context window gets larger and larger. They also mean incurring higher token costs to process prompts that are longer, potentially not efficiently optimized, or request complex multi-step tasks.

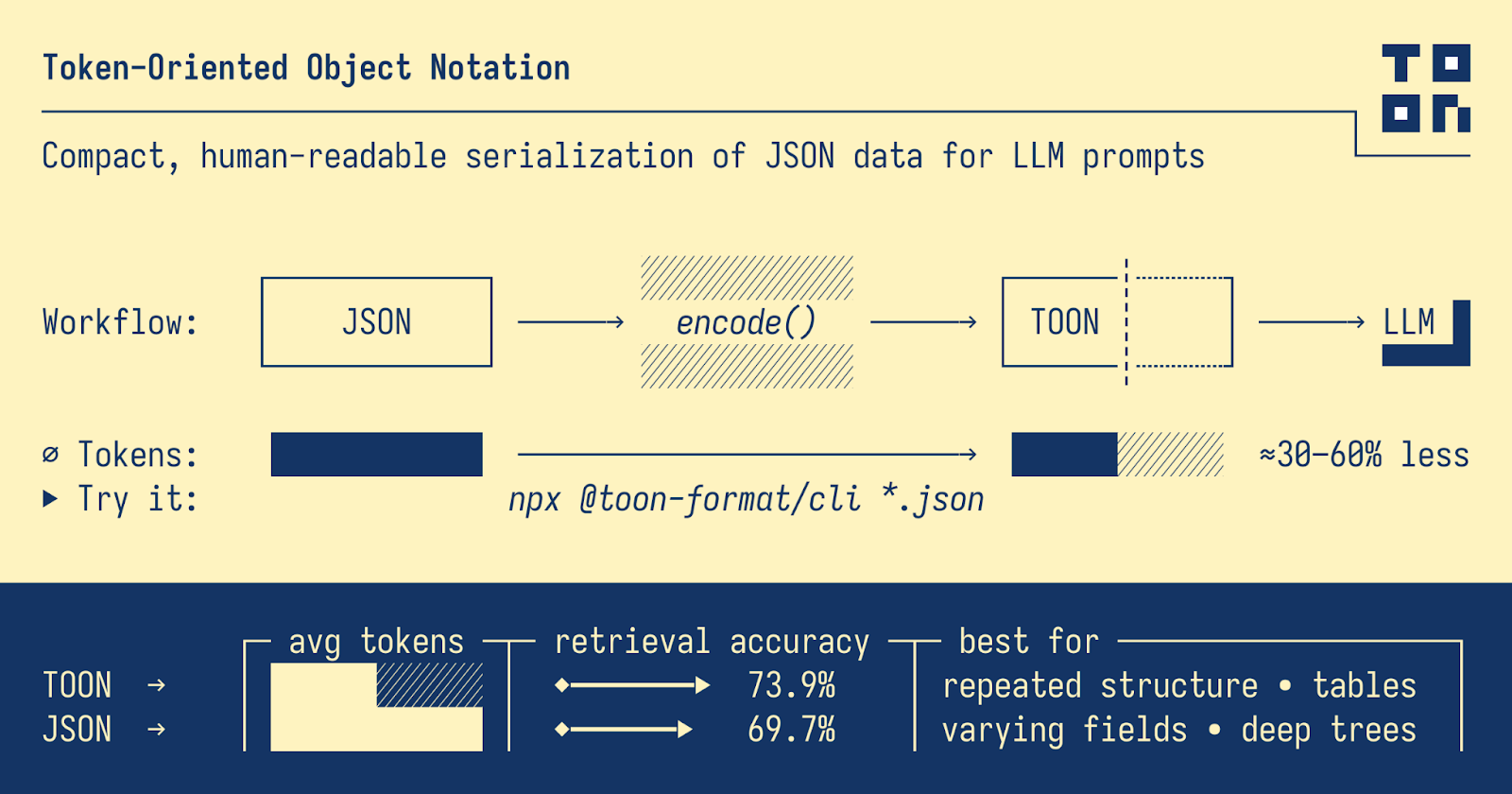

Vendors and researchers continue to work on making AI model operations cheaper by streamlining hardware-based compute usage for processes like training, fine-tuning, and inference. But there are real gains to be made on the prompting side as well. The popular open-source TOON project encodes the JSON data model in a more-compact text format that’s readable for humans and LLMs and efficiently conserves token budgets.

However, there’s more to building good AI applications than pinching pennies on tokens, as creator and self-taught engineer Johann Schopplich explains.

TOON is a data serialization format that’s human- and LLM-readable. Image courtesy Johann Schopplich and GitHub

Making JSON Human-Readable (and LLM-Readable)

The TOON project came from a need to conserve tokens for an extremely specific, text-heavy use case that required interfacing with human editors as well as with LLMs. Specifically, Schopplich’s team at the time needed to manage a daily stream of news updates for an economic and political podcast.

The creator explains, “CSV tables are pretty efficient and LLMs are trained on them, so in terms of efficiency, you can’t really top them. But we had a very specific use case for a media company that needed to read different RSS feeds from around the world. We needed to give the editorial team the scraped content from the feeds, and while we always had the idea of the team prompting with AI, we wanted to inject our aggregated data into the prompt first.”

“That’s why raw JSON wasn’t really an option. It wasn’t readable for newsroom editors, and it wasn’t really token-efficient, because JSON often repeats the same keys over and over. CSV tables are compact but flat-only and don’t handle nested data. YAML is human-friendly, but its flexibility and looser structure make it harder to validate and less predictable for LLM prompts.”

“So, keeping JSON’s data model, but designing a more-compact, deterministic representation on top of it, made the most sense.” TOON emerged as a way to both conserve tokens while also being workable for a non-technical editorial team.

In addition to saving tokens and being readable for a wider audience, TOON’s headers act as a kind of guardrail for LLM processing. “I think that’s another key feature: It’s token-efficient for tabular data. Like CSV, TOON declares field names in a header row. But it also adds an explicit length header for the number of rows.”

Providing both a field list and a numbered length header gives LLMs the ability to understand how many columns and rows are in a table, which would let a team quickly and accurately gauge how many news stories came in from specific regions, for example. The creator notes that in benchmarks across 11 datasets and 4 LLMs, TOON achieved about 73.9% accuracy versus JSON’s 69.7%, while using roughly 40% fewer tokens.

Extending JSON-Based Serialization Beyond Prompting

“The idea was not necessarily to create something completely new that LLMs don't understand. That's why it borrows syntax from YAML and the CSV-esque header structure. It feels familiar for LLMs and often performs best when you give [your LLM] a short primer.” The creator notes that there are already official and community implementations in TypeScript/JavaScript, Python, Rust, Go, Java, .NET, Dart, and more.

“If people use the encoder for the [programming] language they are most familiar with, then they can provide an encoder for non-technical people, so to speak. The structure of TOON is pretty readable; once a non-technical viewer glances at it, they can usually understand the structure.”

Schopplich notes that the project is apparently providing gains in unexpected ways. “Some people have reached out to me about how they store TOON instead of JSON in their vector database, saving space (and later token cost) when they re-inject that data into LLMs. It’s not what the project was intended for, but why not? I’ve also seen people replacing their JSON encoding function to just TOON encoding. That was another idea behind the project: To be a drop-in replacement for the JSON you’d otherwise send to LLMs.”

“If you have data in your storage and you retrieve it, and send this data encoded to your LLM, you don't have to think about which parts of the data should be in YAML, and which should be in tabular CSV format. You can just use the TOON encoder and you are pretty much good to go. That's also one of the main ideas for the project: To keep the holistic structure that JSON provides, just in a different format.”

Token reduction is one thing, but accuracy of data retrieval is another.” -Johann Schopplich, Creator/TOON

How AI Startup Founders Should Think About Token Economics

In terms of understanding token economics for startups, Schopplich concedes that founder life isn’t easy. Yes, cash-strapped startup founders, concerned about dwindling runway, may fixate on their product’s token economics, but it could be at the overall cost of product quality and overall user experience.

“Token reduction is one thing, but accuracy of data retrieval is another. Or, maybe we can reduce a piece of content into very small tokens, but the LLM takes a lot of time to process it [introducing unexpected latency]. One of the goals of the TOON project was to not compromise on accuracy. The accuracy per 1,000 tokens is actually on par with JSON, if not better because we have the length header as a guardrail.”

“So by all means, benchmark end-to-end. But not just the tokens you save. Also measure Time to First Tokens (TTFT): How long it takes for the LLM to start responding at all.” In other words, cost-conscious founders who overindex on minimizing token costs to save budget may end up with a product that is so laggy, or so inaccurate, or both, that no customer even wants it, regardless.

Content from the Library

Combining Context Engineering With Lightweight Compute

Making Semantic Text Management Lightweight Much has been written about the ability of large language models (LLMs) to spin up...

How to Approach Multi-Agent Frameworks

What Goes Into Multi-Agent Orchestration AI agents are autonomous systems which take action independent of regular human...

How to Train an LLM on Your Own Data: Beginner’s Guide

Introduction to Training an LLM Organizations of all sizes and maturity levels are adopting artificial intelligence (AI)...